Table of Contents

- What Are Code LLMs?

- LLM Code Tasks

- Foundation Models for Code Generation (Code LLMs)

- What is Code LLM Fine Tuning?

- Code LLMs Fine-Tuning Approaches Tailored for Coding Tasks

- Code LLMs Fine-Tuning Considerations

- Essential Datasets for Code LLMs

- Integration of Code LLMs into Integrated Development Environments (IDEs) and Coding Tools

- Major Benchmarks of Programming Task Performance

- Code LLM Model Architectures

- The Strategic Role of GPUs in Code LLM Deployment

- Krasamo’s Services for Code LLM Enhancement

- Key Takeaway

- References

In the age of AI, organizations are increasingly turning to AI-assisted technologies to elevate their systems, streamline processes, and drive innovation. This transformation is powered by robust AI capabilities and sophisticated software designed to enhance operational performance and provide a competitive edge.

Integrating generative AI into existing frameworks does require some development effort. Yet, this same technology can significantly streamline the development process, accelerating the creation of cutting-edge solutions while potentially increasing ROI. Developers can use application programming interfaces (APIs) to tap into the power of large language models (LLMs) and unlock the full potential of Natural Language Processing (NLP) in their applications.

LLM code generation is shortening the learning curve for new developers and strengthening their coding proficiency. Integrated into development environments (IDEs), LLMs offer real-time code suggestions, error detection, and context-aware assistance, making programming tasks more efficient and intuitive. By interpreting and applying a programming language's rules, structure, and semantics, and by supporting automated debugging, AI enables faster code generation and more efficient development workflows.

This blog post covers the essential concepts for understanding LLM code generation, including specialized model versions, code datasets, model fine-tuning, safety benchmarks, and code-related tasks, each a building block of tomorrow's AI-driven solutions.

What Are Code LLMs?

Code large language models (Code LLMs) are specialized large language models that have attracted considerable attention due to their exceptional performance on a wide range of code-related tasks. Unlike general-purpose large language models (LLMs) trained on diverse natural language data, Code LLMs are specifically trained or fine-tuned on programming code and documentation.

This training enables them to understand and generate code, making them highly effective for tasks such as code completion, bug fixing, generating code from natural language descriptions, and translating code from one programming language to another. Their effectiveness stems from pre-training on a large code corpus, which gives them a wealth of professional programming knowledge for tackling code-related complexity.

LLM Code Tasks

Code LLMs have advanced significantly and now address a range of code-related tasks that streamline and enhance the software development process and LLMOps. The following are the most relevant tasks in which LLMs are showing proficiency:

- Code Generation: Code LLMs such as the Code Llama series and GPT-4 have been shown to excel at code generation, creating accurate and functional code snippets from textual descriptions. This capability is crucial for automating programming tasks and accelerating development workflows.

- Code Summarization: Code summarization involves creating concise, understandable summaries of code blocks. In this task, models such as CodeT5+ outperform others such as GPT-3.5, helping developers quickly grasp the essence of complex codebases.

- Code Translation: Code LLMs are adept at translating code from one programming language to another, a task in which GPT-4 has been reported to perform better than other models. This capability facilitates code reuse across different technology stacks and simplifies the migration of legacy code.

- Test Case Generation: Models such as GPT-4 and GPT-3.5 (GPT-3.5-turbo) demonstrate superior performance in generating test cases, which are essential for verifying software functionality and robustness. Automated test generation helps ensure software reliability and quality.

- Vulnerability Repair: Codex has shown promising results in identifying and fixing security vulnerabilities in code. Although data on this task is still limited, automatically repairing vulnerabilities is critical for maintaining the security and integrity of software applications.

- Optimization and Refactoring: Code LLMs can automate performance optimization and refactor code to adhere to coding best practices.

- Generating Documentation: Automatically generating documentation from code is another valuable application of Code LLMs. By understanding what code snippets do, these models can help produce useful documentation, easing onboarding for new developers and improving codebase maintainability.

- Debugging: By suggesting fixes for bugs and errors, Code LLMs can significantly reduce debugging time and make the development process more efficient. This is particularly useful for identifying and resolving issues in complex software projects.

Foundation Models for Code Generation (Code LLMs)

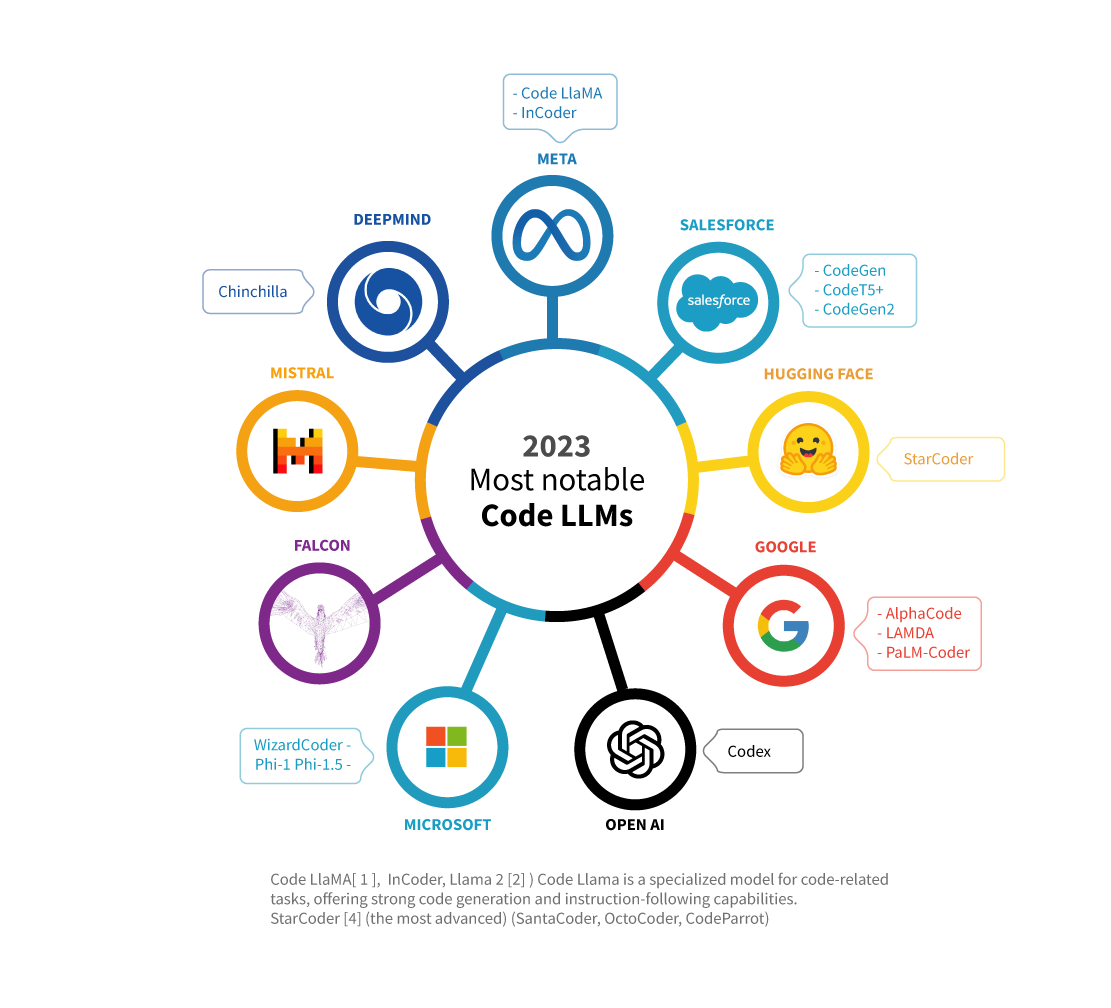

As generative AI technologies advance, more companies are developing and adopting Code LLMs.

Comparing the performance of Code LLMs with that of general LLMs is challenging: models differ in architecture and fine-tuning technique, and published evaluations disagree about which software engineering tasks each model excels at.

Some LLMs for code generation are trained only on code, while others start from a general-purpose foundation model, typically pre-trained on general text and code data, and are then further trained and fine-tuned on domain-specific code. Code LLMs generally outperform general LLMs on software engineering tasks because of this specialized, domain-specific training and the task-specific proficiency it produces.

How should you choose a model? Whether you are selecting an existing model or planning to build your own, analyze its performance evaluations on the engineering tasks you care about. Software companies adopting LLM code generation are gaining experience with the challenges current models face in comprehension, inference, explanation, adaptation to new tasks, and multitasking.

Although many variants of Code LLMs exist, reviewing and selecting the models based on the tasks is essential. Certain Code LLMs perform better than others in tasks such as generating code from natural language descriptions, summarizing code blocks, translating code from one programming language to another, and identifying and suggesting fixes for vulnerabilities in code. The following are the most notable names of Code LLMs as of the end of 2023.

What is Code LLM Fine Tuning?

Code LLMs can be created from scratch or built on top of an existing LLM used as a base model, which provides an understanding of natural language and code drawn from a vast knowledge repository. If you use a pre-trained model, fine-tune it with suitable techniques so that its outputs fit your use case. This specialized fine-tuning uses domain-specific datasets that target specific tasks and training objectives, enabling Code LLMs to better understand the nuances of programming languages and coding practices.

Gathering a diverse and high-quality code dataset is essential for training models. It ensures the model is exposed to various coding tasks, languages, and scenarios. This is crucial for developing a robust understanding of programming concepts and generating accurate and useful code completions, fixes, and documentation. This code is the foundational material from which the instruction data for fine-tuning is generated.

Code LLM fine-tuning involves specialized training and refinement steps designed to enhance a pre-trained Large Language Model’s (LLM) ability to understand and generate programming code based on natural language instructions. This process begins with a foundation model with a baseline proficiency in natural language and code derived from its initial training on a diverse general-purpose text and programming code dataset. The fine-tuning process then further specializes this model through different fine-tuning approaches.

Code LLMs Fine-Tuning Approaches Tailored for Coding Tasks

Fine-tuning approaches are tailored to enhance Code LLMs’ performance on specific tasks:

- Infilling and Contextual Understanding: Implement training objectives that allow the model to intelligently fill in missing portions of code by considering the context around the gap and handling large input contexts. This will enable it to manage and reason about extensive codebases effectively.

- Instruction Tuning: Instruction tuning is a process that fine-tunes Large Language Models (LLMs) with a dataset comprising multi-task instructions described in natural language. Instruction tuning aims to align the pre-trained models to better understand and follow human instructions across various tasks. This approach improves the model’s ability to interpret instructions as given in natural language and execute tasks as intended by the user. The core idea is to augment the cross-task generalization capabilities of LLMs, enabling them to perform well on unseen tasks by leveraging the instruction dataset.

- Generalization: Instruction tuning was initially proposed to augment the cross-task generalization capabilities of large language models (LLMs). By fine-tuning LLMs with diverse NLP task instructions, instruction tuning enhances performance across many unseen tasks, making the models more versatile and adaptable.

- Alignment: Having learned from many self-supervised tasks at both token and sentence levels, pre-trained models possess the inherent capability to comprehend text inputs. Instruction tuning introduces instruction-level tasks for these pre-trained models, enabling them to extract more information from the instruction beyond the semantics of raw text. This includes understanding user intention, which enhances their interactive abilities with human users, thereby contributing to better alignment between the model’s outputs and user expectations.

- Task-Specialized Training: Training on code-specific tasks, including code completion, bug fixing, and generating new code from given requirements, using both real-world code and documentation. This step ensures the model understands code syntax, semantics, and the practical application of programming languages.

- Safety and Bias Considerations: Incorporating safety measures to prevent the generation of unsafe or biased code, ensuring the model behaves responsibly in its coding suggestions and actions.
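
The infilling objective listed above is commonly implemented as a data transformation rather than an architectural change: a training document is split at random into a prefix, a middle, and a suffix, then reordered so that a left-to-right model learns to generate the middle after seeing both sides. The minimal sketch below assumes StarCoder-style sentinel strings (`<fim_prefix>`, `<fim_suffix>`, `<fim_middle>`) and prefix-suffix-middle ordering; the sentinel names and the default `fim_rate` are illustrative assumptions, since each model family defines its own special tokens and settings.

```python
import random

# Sentinel strings are assumptions modeled on StarCoder-style FIM tokens;
# use the special tokens defined by your model's tokenizer instead.
FIM_PREFIX = "<fim_prefix>"
FIM_SUFFIX = "<fim_suffix>"
FIM_MIDDLE = "<fim_middle>"


def fim_transform(document: str, rng: random.Random, fim_rate: float = 0.5) -> str:
    """Return `document` unchanged, or rewritten in prefix-suffix-middle order.

    With probability `fim_rate` (an illustrative value), two character offsets
    are sampled uniformly and the text is split into prefix/middle/suffix.
    The middle is moved to the end so the model learns to fill it in using
    context from both sides of the gap.
    """
    if len(document) < 2 or rng.random() >= fim_rate:
        return document  # keep an ordinary left-to-right training example
    start, end = sorted(rng.sample(range(len(document) + 1), 2))
    prefix, middle, suffix = document[:start], document[start:end], document[end:]
    return f"{FIM_PREFIX}{prefix}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}{middle}"


if __name__ == "__main__":
    source = "def add(a, b):\n    return a + b\n"
    print(fim_transform(source, random.Random(0), fim_rate=1.0))
```

Keeping `fim_rate` below 1.0 means part of the data stays in ordinary left-to-right form, so the model still practices plain completion alongside infilling.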

This fine-tuning process results in a highly specialized Code LLM that excels in various code-related tasks. It offers developers a powerful tool for improving coding efficiency, quality, and collaboration among technical and non-technical team members.

Through these advanced techniques, Code LLMs become more than just coding assistants; they evolve into integral partners in the software development process, capable of contributing to a wide range of programming tasks with increased accuracy and effectiveness.

Code LLMs Fine-Tuning Considerations

Developers must ensure responsible development and deployment, mitigate risks associated with code generation, and fine-tune the models to align with ethical standards and safety requirements.

Use code-specific benchmarks to evaluate the model against its intended use and end-user requirements. This includes assessing the model's cybersecurity safety and its potential to generate insecure code patterns. Evaluate performance on benchmarks such as HumanEval and MBPP, along with alternative evaluation methods and metrics. Match the security and robustness of the training code dataset to the security requirements of the generated output and of the systems into which the code will be integrated.

Essential Datasets for Code LLMs

Selecting the right code large language model (LLM) for a development team involves careful consideration of the model’s training dataset, among other factors. Compiling and refining datasets is essential to ensuring the efficacy and reliability of Code LLMs across a range of software engineering tasks.

Quality and Diversity of Datasets

A high-quality dataset significantly boosts the performance of Code LLMs, as demonstrated by models like Phi-1 and Phi-1.5, which outperform larger models on various benchmarks despite their smaller size. Data quality matters greatly: it encompasses not only the syntactic and semantic correctness of the code but also the inclusion of diverse programming tasks, languages, and environments.
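
As a rough illustration of what data-quality filtering can look like, the sketch below removes exact duplicates and applies a few cheap heuristics to Python files: it drops files with very long lines (often minified or generated code), files with a low share of alphanumeric characters, and code that does not parse. These heuristics and thresholds are illustrative assumptions; they are not the method used to build the Phi models' training data.

```python
import ast
import hashlib


def passes_quality_heuristics(
    source: str,
    max_avg_line_len: float = 100,
    max_line_len: int = 1000,
    min_alnum_fraction: float = 0.25,
) -> bool:
    """Cheap checks on one Python file; all thresholds are illustrative."""
    lines = source.splitlines()
    if not lines:
        return False
    lengths = [len(line) for line in lines]
    if sum(lengths) / len(lengths) > max_avg_line_len or max(lengths) > max_line_len:
        return False  # likely minified or auto-generated
    alnum = sum(ch.isalnum() for ch in source)
    if alnum / len(source) < min_alnum_fraction:
        return False  # mostly symbols or data blobs
    try:
        ast.parse(source)  # syntactic correctness (Python only)
    except (SyntaxError, ValueError):
        return False
    return True


def filter_corpus(files: list[str]) -> list[str]:
    """Drop exact duplicates (by SHA-256) and files that fail the heuristics."""
    seen: set[str] = set()
    kept: list[str] = []
    for source in files:
        digest = hashlib.sha256(source.encode("utf-8")).hexdigest()
        if digest in seen:
            continue
        seen.add(digest)
        if passes_quality_heuristics(source):
            kept.append(source)
    return kept
```

Exact hashing only catches byte-identical copies; larger pipelines typically add near-duplicate detection and parsers for each language they include.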

Domain-Specific Knowledge

Incorporating domain-specific knowledge into the training data, such as specific programming languages, libraries, and frameworks, is crucial. This ensures that the selected Code LLM is tailored to the real-world needs of end-users and capable of handling specific software engineering tasks effectively. The attention to specialized datasets, like those focusing on Python programming or data science tasks with Jupyter notebooks, enables models to grasp the nuanced requirements of different domains.

Safety and Reliability

Ensuring the dataset is free from harmful or malicious code is paramount for maintaining the safety and reliability of Code LLMs. This involves rigorous screening and filtering processes during dataset compilation to prevent the propagation of vulnerabilities or biases within generated code.
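
Screening can start with simple pattern matching before heavier tools are applied. The sketch below flags files that contain what look like hard-coded credentials or a download-and-execute shell idiom. The pattern list is a small, hypothetical example; a real pipeline would combine it with dedicated secret scanners, license checks, and malware or PII detection.

```python
import re

# Illustrative patterns only; real screening uses far broader rule sets.
SUSPICIOUS_PATTERNS = {
    # AWS access key IDs start with "AKIA" followed by 16 uppercase letters/digits.
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # PEM-encoded private keys committed to source.
    "private_key_block": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
    # Piping a downloaded script straight into a shell.
    "curl_pipe_shell": re.compile(r"curl[^\n|]*\|\s*(?:ba)?sh\b"),
}


def screen_file(source: str) -> list[str]:
    """Return the names of all patterns that match `source` (empty if clean)."""
    return [name for name, pattern in SUSPICIOUS_PATTERNS.items() if pattern.search(source)]


def screen_corpus(files: dict[str, str]) -> tuple[dict[str, str], dict[str, list[str]]]:
    """Split a {path: source} mapping into kept files and flagged files with reasons."""
    kept: dict[str, str] = {}
    flagged: dict[str, list[str]] = {}
    for path, source in files.items():
        hits = screen_file(source)
        if hits:
            flagged[path] = hits
        else:
            kept[path] = source
    return kept, flagged
```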

Comprehensiveness

A comprehensive dataset enriched with code snippets, documentation, and discussions about code enables Code LLMs to better understand the context and purpose behind coding tasks. This includes natural language data that discusses code, offering insights into how programmers think and solve problems and thereby enhancing the model's ability to generate meaningful, contextually relevant code.

Public and Specialized Sources

Leveraging publicly available code from platforms like GitHub and open datasets such as The Pile, the public GitHub data hosted on Google BigQuery, and others enriches the training data with real-world code examples and challenges. This exposes Code LLMs to a variety of coding styles, practices, and problem-solving approaches, making them adaptable to many programming scenarios.

Instruction Tuning Dataset

An instruction-tuning dataset is crafted to enhance an LLM’s ability to follow explicit programming instructions in natural language. It consists of pairs of natural language instructions and the corresponding code outcomes. This dataset trains the model to interpret and execute complex coding tasks as specified by human-readable commands, making it adept at translating user intentions into functional code. Instruction-tuning datasets enable models like Code Llama – Instruct, which are fine-tuned to generate helpful and accurate code responses based on user prompts, demonstrating improved understanding and execution of specified tasks.
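
To make the instruction/response pairing concrete, the sketch below turns one pair into model inputs and training labels. It assumes a hypothetical prompt template and a toy whitespace tokenizer; real models, Code Llama – Instruct among them, define their own chat formats and subword tokenizers. It also assumes a common supervised fine-tuning practice: prompt positions receive the label `-100`, the default `ignore_index` of PyTorch's `CrossEntropyLoss`, so the loss is computed only on the response.

```python
from dataclasses import dataclass
from typing import Callable

IGNORE_INDEX = -100  # default ignore_index of torch.nn.CrossEntropyLoss

# Hypothetical template; each instruction-tuned model defines its own format.
PROMPT_TEMPLATE = "### Instruction:\n{instruction}\n### Response:\n"


@dataclass
class InstructionExample:
    instruction: str  # natural-language request
    response: str     # target code (or explanation)


def build_training_pair(
    example: InstructionExample, tokenize: Callable[[str], list[int]]
) -> tuple[list[int], list[int]]:
    """Return (input_ids, labels) with prompt positions masked out of the loss."""
    prompt_ids = tokenize(PROMPT_TEMPLATE.format(instruction=example.instruction))
    response_ids = tokenize(example.response)
    input_ids = prompt_ids + response_ids
    labels = [IGNORE_INDEX] * len(prompt_ids) + response_ids
    return input_ids, labels


def make_toy_tokenizer() -> Callable[[str], list[int]]:
    """Whitespace tokenizer that assigns a new id to each unseen word (illustration only)."""
    vocab: dict[str, int] = {}
    return lambda text: [vocab.setdefault(tok, len(vocab)) for tok in text.split()]


if __name__ == "__main__":
    tok = make_toy_tokenizer()
    ex = InstructionExample("Write a function that doubles x.", "def double(x): return 2 * x")
    ids, labels = build_training_pair(ex, tok)
    print(ids)
    print(labels)
```

In practice an end-of-sequence token is usually appended to the response so that the model learns where its answer should stop.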

Self-Instruct Dataset

A self-instruct dataset is built from instructions, and often solutions, that a model generates itself rather than from human-written examples, which reduces reliance on costly manual annotation. Training on such data teaches the model both to follow instructions and to generate them for completing tasks. This approach deepens the model's understanding of coding tasks and solutions, enabling it to propose steps for creating or debugging code on its own. It represents a more advanced form of instruction-based fine-tuning, in which models refine their ability to navigate and solve coding problems with minimal external input.
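
One way to keep self-generated data trustworthy is to filter it by execution. The Code Llama paper describes a pipeline of this kind, in which model-generated programming questions are paired with model-generated unit tests and candidate solutions, and only a solution that passes the tests is kept. The sketch below mirrors that filtering step under stated assumptions: `generate_tests` and `generate_solutions` are hypothetical callables standing in for model calls, and `exec` here has no sandbox, so generated code should only be run in an isolated environment.

```python
from typing import Callable, Optional


def passes_tests(solution: str, tests: str) -> bool:
    """Run candidate code followed by its assert-based tests; True if nothing raises.

    WARNING: exec() runs arbitrary code. Use a sandboxed subprocess with a
    timeout for model-generated code in any real pipeline.
    """
    namespace: dict = {}
    try:
        exec(solution + "\n" + tests, namespace)
    except Exception:
        return False
    return True


def build_self_instruct_example(
    question: str,
    generate_tests: Callable[[str], str],                  # hypothetical model call
    generate_solutions: Callable[[str, int], list[str]],   # hypothetical model call
    n_solutions: int = 10,
) -> Optional[dict]:
    """Keep the first generated solution that passes the generated tests."""
    tests = generate_tests(question)
    for solution in generate_solutions(question, n_solutions):
        if passes_tests(solution, tests):
            return {"instruction": question, "response": solution}
    return None  # discard questions with no verified solution
```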

Integration of Code LLMs into Integrated Development Environments (IDEs) and Coding Tools

Large language models (LLMs) tailored for coding tasks can be built into integrated development environments (IDEs) to enhance the coding process, making it more efficient, intuitive, and user-friendly for developers at all experience levels.

Integrating Code LLMs into IDEs represents a significant advancement in software development. As these models continue to evolve, their integration into development environments is poised to become an indispensable asset for developers worldwide, reshaping the future of software development. Learn more about installing the VS Code extension (llm-vscode).

Code LLMs also integrate with platforms such as Azure AI Studio and Vertex AI for orchestrating LLMs and building generative AI applications, as well as with GitHub Codespaces, Semantic Kernel, LangChain, and the Hugging Face Transformers library.

- Infilling Capability: Some Code LLMs, including several Code Llama models, are trained with an infilling objective. This enables them to complete or generate code based on the context on both sides of the cursor in an IDE. Developers can write code faster and with less effort, as the model can predict and insert snippets, methods, or even entire blocks of code based on partial input and the surrounding code.

- Support for Large Input Contexts: Code LLMs are engineered to process large input contexts; Code Llama, for example, is trained on 16k-token sequences and is reported to handle inputs of up to 100k tokens. This ability is crucial for working within IDEs, where developers may deal with extensive codebases. With a long context, a Code LLM can keep track of more of a project, providing suggestions and completions consistent with the broader codebase, not just the snippet currently being edited.

- Real-time Completion and Suggestions in Source Code Editors: Integrating Code LLMs into IDEs allows for real-time code completion and suggestions. As developers type, the model can offer syntactically and semantically correct code completions, suggest fixes for bugs identified in real-time, and even generate docstrings for functions and methods, enhancing code quality and speeding up the development process.

- Enhanced Documentation and Debugging: Beyond code generation and completion, the model’s understanding of code and natural language enables it to assist with documentation and debugging within IDEs. It can automatically generate comments and documentation based on code functionality, helping maintain clean and understandable codebases. Moreover, it can suggest potential bug fixes by understanding error messages and the current code context.
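
The sketch below shows how an editor integration might turn the text around the cursor into an infilling request under a fixed context budget, keeping the code nearest the cursor when the file is too long. It measures the budget in characters as a stand-in for tokens, splits it evenly between prefix and suffix, and uses placeholder sentinel strings; all three are simplifying assumptions, and a real extension would use the model's tokenizer and its documented infilling format.

```python
# Placeholder sentinels; substitute the FIM tokens documented for your model.
FIM_PREFIX = "<fim_prefix>"
FIM_SUFFIX = "<fim_suffix>"
FIM_MIDDLE = "<fim_middle>"


def build_infill_prompt(buffer: str, cursor: int, max_chars: int = 8000) -> str:
    """Build a prefix-suffix-middle prompt from an editor buffer and cursor offset.

    Characters approximate tokens here. Half the budget goes to the text before
    the cursor (keeping its end) and half to the text after it (keeping its start).
    """
    if not 0 <= cursor <= len(buffer):
        raise ValueError("cursor must lie within the buffer")
    half = max_chars // 2
    before, after = buffer[:cursor], buffer[cursor:]
    prefix = before[max(0, len(before) - half):]  # nearest code above the cursor
    suffix = after[:half]                          # nearest code below the cursor
    # The model is expected to generate the missing middle after FIM_MIDDLE.
    return f"{FIM_PREFIX}{prefix}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}"
```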

Major Benchmarks of Programming Task Performance

In the rapidly evolving software development landscape, code large language models (Code LLMs) have emerged as transformative tools. They bolster programming tasks by generating, summarizing, translating, and optimizing code.

To navigate LLM code generation, developers rely on a suite of benchmark metrics to evaluate the performance and capabilities of Code LLMs across diverse programming challenges. This section delves into the pivotal benchmarks shaping the assessment of Code LLMs, underscoring their significance in refining model accuracy and utility.

HumanEval

HumanEval is a cornerstone benchmark for evaluating code generation. It consists of 164 hand-written Python programming problems, each given as a function signature and docstring; a model's completion counts as correct only if it passes the problem's unit tests. Because it measures functional correctness rather than textual similarity, it tests whether a model can turn a concise natural language description into working code.
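
A HumanEval-style check can be sketched in a few lines: the problem's prompt (signature and docstring) is concatenated with the model's completion, the problem's test code is appended, and the resulting program either passes or fails. The field names `prompt`, `test`, and `entry_point` follow the published HumanEval dataset, where `test` defines a `check(candidate)` function; the toy problem below is made up, and the official harness runs each program in a separate process with a timeout rather than calling `exec` in-process as this sketch does.

```python
def check_completion(problem: dict, completion: str) -> bool:
    """Return True if prompt + completion passes the problem's unit tests.

    WARNING: exec() runs untrusted model output; isolate it in real use.
    """
    program = problem["prompt"] + completion + "\n" + problem["test"]
    namespace: dict = {}
    try:
        exec(program, namespace)
        namespace["check"](namespace[problem["entry_point"]])
    except Exception:
        return False
    return True


# A made-up problem in the HumanEval layout.
toy_problem = {
    "prompt": 'def add(a: int, b: int) -> int:\n    """Return the sum of a and b."""\n',
    "test": "def check(candidate):\n    assert candidate(2, 3) == 5\n    assert candidate(-1, 1) == 0\n",
    "entry_point": "add",
}

if __name__ == "__main__":
    print(check_completion(toy_problem, "    return a + b\n"))  # True
    print(check_completion(toy_problem, "    return a - b\n"))  # False
```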

Expansive Benchmarks for Comprehensive Assessment

- MBPP & APPS: These benchmarks extend the evaluation horizon by introducing various programming problems, offering a broader canvas to test code generation and logical reasoning skills of Code LLMs.

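- GSM8K & DS-1000: GSM8K contains grade-school math word problems, often used to test reasoning through generated programs, while DS-1000 covers data science problems across popular Python libraries such as NumPy and pandas. Together they scrutinize models' ability to handle practical tasks, highlighting their adaptability and problem-solving skills.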

- MultiPL-E: A multi-lingual evaluation framework, MultiPL-E accentuates a model’s versatility and proficiency across different programming languages, an essential attribute in the global coding ecosystem.

CodeBLEU: A Metric of Syntactic and Semantic Fidelity

CodeBLEU extends the BLEU metric from machine translation by combining n-gram overlap with syntactic matching (over abstract syntax trees) and semantic matching (over data flow). It therefore rewards models whose generated code is both syntactically accurate and faithful to the intended semantics of the reference code.
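
CodeBLEU is defined as a weighted sum of four components, CodeBLEU = alpha * BLEU + beta * BLEU_weight + gamma * Match_ast + delta * Match_df, where BLEU_weight gives keywords extra weight, Match_ast compares syntax subtrees, and Match_df compares data-flow graphs; equal weights of 0.25 each are commonly used. The sketch below implements that weighted combination plus a deliberately simplified syntax-match stand-in built on Python's `ast` module. It is not the reference CodeBLEU implementation, which uses language-specific parsers and its own subtree and data-flow matching.

```python
import ast
from collections import Counter


def subtree_counts(source: str) -> Counter:
    """Count every AST subtree of Python code, keyed by its ast.dump() string."""
    tree = ast.parse(source)
    return Counter(ast.dump(node) for node in ast.walk(tree))


def ast_match(candidate: str, reference: str) -> float:
    """Simplified syntax match: share of reference subtrees also found in the candidate."""
    cand, ref = subtree_counts(candidate), subtree_counts(reference)
    matched = sum(min(count, cand[key]) for key, count in ref.items())
    return matched / sum(ref.values())


def codebleu(
    bleu: float,
    weighted_bleu: float,
    ast_score: float,
    dataflow_score: float,
    weights: tuple[float, float, float, float] = (0.25, 0.25, 0.25, 0.25),
) -> float:
    """Weighted sum of the four CodeBLEU components (each expected in [0, 1])."""
    alpha, beta, gamma, delta = weights
    return alpha * bleu + beta * weighted_bleu + gamma * ast_score + delta * dataflow_score
```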

Pass@k: Benchmarking Functional Correctness

Pass@k emphasizes functional correctness: it measures the probability that at least one of k generated solutions passes the specified test cases. Because it judges code by execution rather than by resemblance to a reference, it favors models that deliver solutions ready for real-world use.
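
Pass@k is usually estimated by sampling n >= k completions per problem, counting the c that pass, and applying the unbiased estimator introduced alongside HumanEval in the Codex paper: pass@k = 1 - C(n - c, k) / C(n, k), averaged over problems. The sketch below implements that formula with Python's exact integer `math.comb`; the sample counts in the usage lines are made up.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem: n samples generated, c of them correct.

    Equals the probability that at least one of k samples, drawn without
    replacement from the n generated, passes the unit tests.
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        return 1.0  # every size-k subset contains a correct sample
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Average pass@k over problems, given one (n, c) pair per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


if __name__ == "__main__":
    print(pass_at_k(10, 5, 1))  # 0.5
    print(pass_at_k(10, 1, 5))  # 0.5
```

Sampling more completions than k (for example n = 200 for pass@1 through pass@100) lowers the variance of the estimate compared with drawing exactly k samples.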

CodeXGLUE: The Epitome of Model Versatility

CodeXGLUE encapsulates a comprehensive suite of tasks, including code completion, summarization, and translation, offering a panoramic view of a model’s capabilities. This benchmark celebrates the multifaceted nature of Code LLMs, spotlighting models that excel across a spectrum of software engineering domains.

Careful selection and application of these benchmark metrics guide the development of Code LLMs that proficiently generate, summarize, and translate code aligned with the nuanced demands of software engineering tasks. By leveraging these benchmarks, developers and researchers can shape Code LLMs into more refined, versatile, and practical tools, advancing AI-driven software development.

Learn more about the Generative AI Landscape and Tech Stack

Code LLM Model Architectures

The architectural diversity among Code LLMs underscores the importance of considering model architecture in the selection process for development teams. Each architecture offers distinct advantages, whether it be the Transformer’s all-around proficiency, the nuanced understanding and generation capabilities of Encoder-Decoder models, the focused generative power of Decoder-Only Transformers, or the specialized task handling of Mixture of Experts models.

Selecting the right Code LLM architecture involves aligning the model’s strengths with the development team’s specific coding tasks and challenges, ensuring the most efficient and effective coding assistance.

Transformer Architecture

The Transformer architecture, foundational to LLM development, is celebrated for its exceptional ability to process sequential data and its scalable design. It serves as the backbone for numerous Code LLMs, enabling sophisticated understanding and generation of programming code by leveraging self-attention mechanisms to weigh the importance of different parts of the input data.
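
The self-attention mechanism mentioned above fits in a few lines: each position's query is compared with every key, the scaled scores are normalized with a softmax, and the output is the corresponding weighted average of the values, softmax(Q K^T / sqrt(d)) V. The optional causal mask, which hides later positions, is what decoder-only models use so that each token attends only to earlier tokens. This is a single-head, pure-Python sketch for readability; real models use batched tensor operations, learned projections, and multiple heads.

```python
import math

Matrix = list[list[float]]


def softmax(scores: list[float]) -> list[float]:
    """Numerically stable softmax; -inf scores receive zero weight."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix, causal: bool = False) -> Matrix:
    """Single-head attention softmax(Q K^T / sqrt(d)) V, one output row per query."""
    d = len(q[0])
    output: Matrix = []
    for i, query in enumerate(q):
        scores = []
        for j, key in enumerate(k):
            if causal and j > i:
                scores.append(float("-inf"))  # mask out future positions
            else:
                scores.append(sum(a * b for a, b in zip(query, key)) / math.sqrt(d))
        weights = softmax(scores)
        output.append([sum(w * row[c] for w, row in zip(weights, v)) for c in range(len(v[0]))])
    return output
```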

Encoder-Decoder Models

Models adopting the Encoder-Decoder framework, such as AlphaCode, CodeT5, and CodeRL, excel in tasks that involve translating between different languages or modalities. This architecture is adept at understanding the context of the input (encoder) and generating the corresponding output (decoder), making it highly effective for code translation and summarization tasks. These models underscore the flexibility of Code LLMs in adapting to varied software engineering requirements by effectively capturing and translating the intricate nuances of programming languages.

Decoder-Only Transformer

Decoder-only transformer models like LaMDA, PaLM, and StarCoder are engineered to produce coherent and contextually relevant outputs (favoring generation tasks). This architecture is distinguished by its focus on generation without the encoding step, optimizing it for creating programming code directly from natural language prompts or completing code snippets. The proficiency of these models in generating high-quality code highlights their utility in automating coding tasks, enhancing developer productivity, and fostering creative coding solutions.

Mixture of Experts (MoE)

The Mixture of Experts (MoE) architecture introduces a paradigm shift by incorporating multiple specialized sub-models, each adept at handling distinct types of inputs or tasks. This approach allows the overall model to excel across a broader spectrum of software engineering tasks by dynamically selecting the most relevant ‘expert’ for a given input. MoE models demonstrate the potential of Code LLMs to leverage specialized knowledge effectively, ensuring high performance and adaptability to complex coding challenges.
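
Expert selection is typically done by a small learned router. In the sketch below, the router scores each expert with a dot product, keeps the top-k experts, renormalizes their scores with a softmax, and returns the weighted sum of only those experts' outputs, so the remaining experts do no work for that input. The router weights, the toy experts, and k = 2 are illustrative assumptions; in MoE language models the experts are usually feed-forward sub-layers inside each Transformer block, and routing happens per token.

```python
import math
from typing import Callable

Vector = list[float]


def top_k_gate(logits: Vector, k: int) -> dict[int, float]:
    """Map the indices of the k largest logits to softmax weights over just those k."""
    chosen = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    top = max(logits[i] for i in chosen)
    exps = {i: math.exp(logits[i] - top) for i in chosen}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}


def moe_layer(
    x: Vector,
    router: list[Vector],
    experts: list[Callable[[Vector], Vector]],
    k: int = 2,
) -> Vector:
    """Route x to its top-k experts and mix their outputs by the gate weights."""
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in router]  # one score per expert
    gates = top_k_gate(logits, k)
    out = [0.0] * len(x)
    for idx, weight in gates.items():  # only the selected experts are evaluated
        y = experts[idx](x)
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out
```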

The Strategic Role of GPUs in Code LLM Deployment

The deployment of Code LLMs is reshaping software development and requires robust computational infrastructure. These models' size and intricate operations demand significant GPU and hardware resources, making traditional fine-tuning approaches resource-intensive. This section explores the hardware foundations needed to harness the full potential of Code LLMs, from GPUs to the efficiencies offered by cloud computing platforms.

GPU Requirements: Code LLMs, especially state-of-the-art models with billions of parameters, necessitate powerful GPUs for training and inference. The high computational load required for processing extensive datasets and performing the numerous matrix multiplications inherent in machine learning operations makes using advanced GPUs essential.

Parallel Computing Capabilities: Hardware with robust parallel computing capabilities is required to manage the intense demands of Code LLMs. GPUs are particularly well-suited for this purpose because they can execute many operations simultaneously, which is crucial for training and running these models efficiently.

Memory and Storage: Adequate memory is crucial for handling large-scale datasets and training Code LLMs. Moreover, the hardware needs to provide sufficient storage to house the datasets and the multiple versions of the model that evolve during the training process.
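
A back-of-the-envelope calculation shows why memory dominates hardware planning. Weights alone take parameters times bytes per parameter, so a 7-billion-parameter model in 16-bit precision needs about 14 GB before activations or the attention cache are counted. Full fine-tuning with the Adam optimizer in mixed precision is commonly estimated at about 16 bytes per parameter (16-bit weights and gradients plus 32-bit master weights and two optimizer moments), following the accounting in the ZeRO paper. The sketch below encodes these rules of thumb; they are approximations that ignore activations, framework overhead, and memory-saving techniques such as quantization or parameter-efficient fine-tuning.

```python
# Approximate bytes per parameter for common settings (rules of thumb).
BYTES_PER_PARAM = {
    "fp32_weights": 4,
    "fp16_weights": 2,   # also applies to bf16
    "int8_weights": 1,
    "int4_weights": 0.5,
    # Mixed-precision Adam: fp16 weights (2) + fp16 grads (2)
    # + fp32 master weights, momentum, and variance (4 + 4 + 4).
    "adam_mixed_precision_training": 16,
}


def estimate_memory_gb(n_params: int, setting: str) -> float:
    """Rough memory for model state only (no activations or cache), in GB (1e9 bytes)."""
    return n_params * BYTES_PER_PARAM[setting] / 1e9


if __name__ == "__main__":
    for setting in BYTES_PER_PARAM:
        print(f"7B, {setting}: {estimate_memory_gb(7_000_000_000, setting):.1f} GB")
```

Under these assumptions, full fine-tuning of a 7B model needs roughly 112 GB for model and optimizer state alone, more than a single 80 GB GPU holds, which is one reason multi-GPU setups and cloud platforms matter here.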

Infrastructure Optimization: Infrastructure optimization is essential for improving model training efficiency. Hardware manufacturers continually advance GPU architectures to deliver better performance for AI and machine learning workloads, which benefits the operation of Code LLMs. This is evident in the support these models receive from major hardware platforms such as AMD, Intel, Nvidia, and Google, which improve performance through hardware and software optimizations.

Cloud Platforms and Managed Services: Running Code LLMs on in-house hardware can require a significant investment. Cloud platforms and managed services provide access to the necessary hardware resources without upfront capital expenditure. For instance, the availability of Llama models on platforms such as AWS and Google Cloud lets businesses of all sizes use powerful computing resources and managed APIs without managing the underlying hardware infrastructure.

GPUs and specialized hardware are critical components of the ecosystem supporting Code LLMs. The continual advancements in hardware, coupled with cloud-based solutions, are lowering the barriers to entry, enabling more widespread use and experimentation with Code LLMs across various industries.

Krasamo’s Services for Code LLM Enhancement

Krasamo is at the forefront of accelerating AI integration and crafting sophisticated software products, offering tailored services for enhancing Code LLMs:

- Custom Development: Custom fine-tuning of Code LLMs to meet the precise demands of diverse applications, ensuring a perfect fit for enterprise-specific requirements.

- Optimization: Our team expertly refines models to unlock peak performance, giving enterprises the edge they need in a competitive marketplace.

- Integration Solutions: We create and deploy tools and plugins that combine with development environments, enhancing productivity with minimal disruption.

- Consultancy in Infrastructure: We provide expert consulting on infrastructure and hardware selection, ensuring your Code LLMs operate on optimal platforms.

- Performance Analytics: We build tools that deliver critical performance insights, empowering businesses to make data-driven decisions about their AI models.

- Cloud Computing: We provide scalability and processing power with robust cloud services designed for intensive workloads.

Key Takeaway

Businesses should implement Code LLMs strategically, considering their specific use cases and the potential impact on development processes and product offerings. Code LLMs typically outperform general LLMs on software engineering tasks, largely because of the targeted fine-tuning and domain-specific training they undergo, which enable them to better address the unique challenges and requirements of software engineering.

For businesses planning to adopt Code LLMs for their development teams, this underscores the value of selecting task-specific models to improve coding efficiency, quality, and innovation. Adhering to best practices for responsible AI development, including safety, fairness, and transparency, is crucial.

However, it’s essential to navigate this process thoughtfully, prioritizing responsible development practices to harness the full potential of these technologies while mitigating associated risks.

References

[1] Code Llama: Open Foundation Models for Code

[2] Llama 2: Open Foundation and Fine-Tuned Chat Models

[3] A Survey of Large Language Models for Code: Evolution, Benchmarking, and Future Trends

[4] StarCoder: may the source be with you!

[5] WizardCoder: Empowering Code Large Language Models with Evol-Instruct