We have seen a rapid expansion of generative AI apps in categories such as image generation, copywriting, code writing, and speech recognition. They are built on large language models (LLMs) or image generation models, both proprietary and open source.

Generative AI is in the early stages of development, and many players still lack differentiation and user retention, so it is unclear how these applications will generate business value. But as their technical capabilities advance, some will emerge successfully and consolidate their AI end products.

Enterprises with established business models, large customer bases, and organized data are adopting generative AI to enhance their current end-user applications and improve their processes quickly. There are several approaches to incorporating AI functionality into existing products and services, whether in established enterprises or in startups with innovative solutions.

Companies adopting generative AI apps are raising the standard by improving their operational performance and building advanced products and services.

This paper discusses generative AI concepts and details how the technology works, the tech stack, and other business insights for clients working on their AI Development paths.

What is Generative AI?

Generative AI is a type of AI that uses machine learning to learn from existing data and generate new content that resembles it. The outputs share characteristics with the training data and can take the form of text, images, or audio.

These applications typically use machine learning algorithms to learn patterns and features from existing data to create new, unique, and similarly structured data.

Building Generative AI Apps

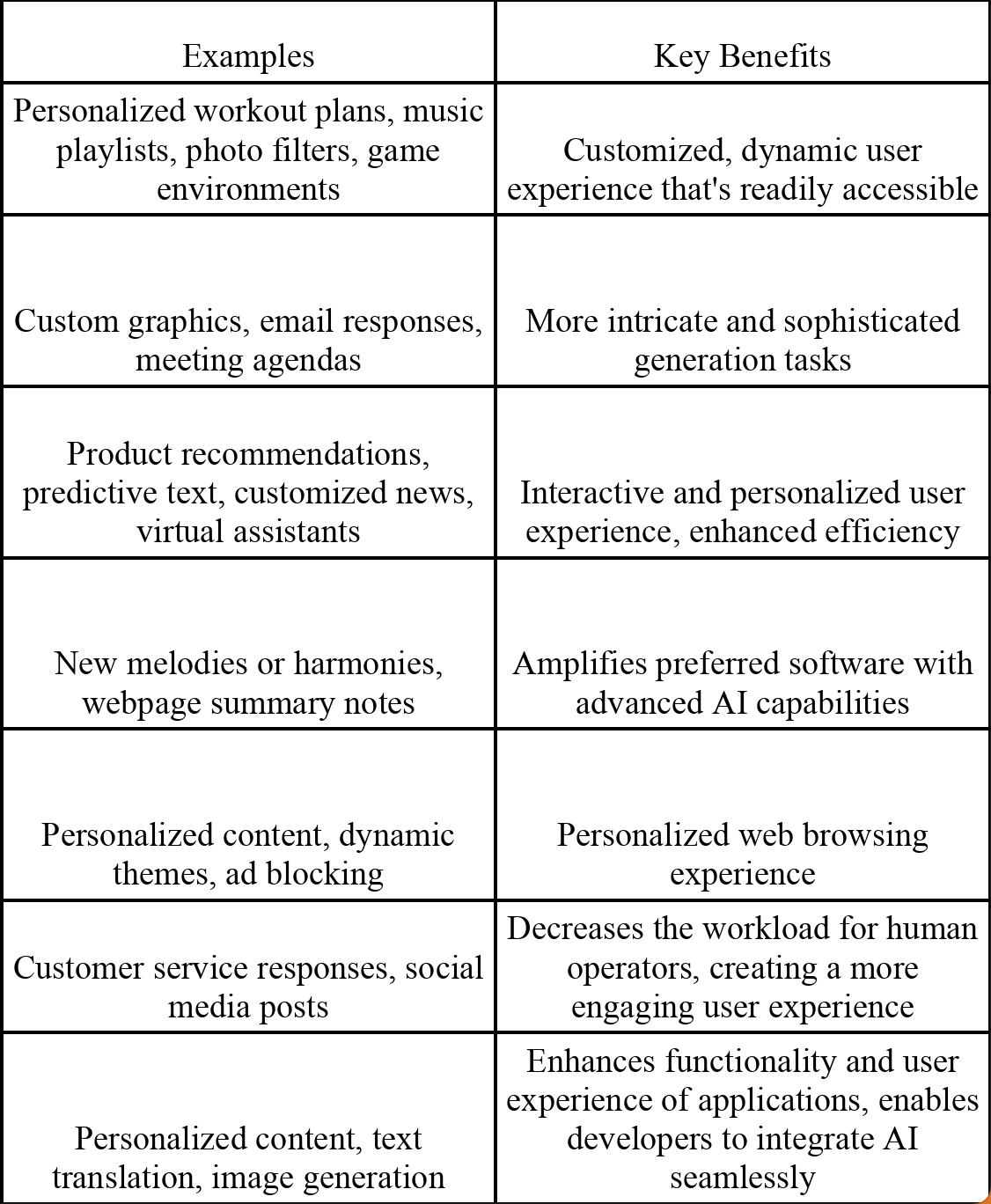

Generative AI technologies, such as language models, open the door to creating applications that can significantly enhance functionality and user experience across various platforms.

Using generative AI to build apps is about integrating generative AI models into the app’s functionality, often through API calls to these models. This integration can aid in personalizing content, automating responses, or generating new creative content.
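
As a minimal sketch of this integration pattern, the snippet below keeps the app feature separate from the model behind it, so the same feature could be pointed at any hosted model. The names `TextModel`, `build_prompt`, `personalize_greeting`, and `echo_model` are hypothetical, and `echo_model` is only a local stand-in for a real API client.

```python
from typing import Callable, Sequence

# A "model" here is any callable that maps a prompt string to generated text.
# In a real app this would wrap an API call to a hosted model (assumption).
TextModel = Callable[[str], str]


def build_prompt(user_name: str, interests: Sequence[str]) -> str:
    """Turn app-side user data into a prompt for the model."""
    topics = ", ".join(interests) if interests else "general topics"
    return (
        f"Write a one-sentence welcome message for {user_name}, "
        f"who is interested in {topics}."
    )


def personalize_greeting(model: TextModel, user_name: str, interests: Sequence[str]) -> str:
    """App feature: personalized content produced by an injected model."""
    return model(build_prompt(user_name, interests)).strip()


def echo_model(prompt: str) -> str:
    """Stand-in model for local testing; it simply echoes the prompt."""
    return f"[generated from prompt] {prompt}"
```

Calling `personalize_greeting(echo_model, "Ana", ["hiking", "jazz"])` exercises the full path without any network access; swapping `echo_model` for a real client would not change the app code.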

Business models are evolving with AI-enhanced apps such as mobile apps, desktop apps, web apps, plugins, extensions, AI bots, and APIs.

When discussing generative AI models, we may refer to models that generate content in various formats like text, images, or audio. But when we talk about large language models (LLMs), we mean models that specifically generate human-like text. LLMs are a specific type of generative AI model.

Types of Generative AI Applications

Generative AI applications can generate various outputs, including text, images, audio, video, and 3D models. Each type of application represents a unique application of generative AI techniques, each bringing distinct potential benefits and challenges.

- Text Generation Apps: These applications generate human-like text. They are widely used in various fields, including creative writing, journalism, content marketing, customer support (in chatbots), etc. Apps based on models like GPT-3 and GPT-4 fall into this category.

- Image Generation Apps: These applications generate realistic images. They can be used to create art or design elements, or even to generate fake but plausible images. Generative Adversarial Networks (GANs) are often used in these applications, for example to transform the style of an image or to change the time of day in a scene.

- Music and Audio Generation Apps: These applications can generate music or other forms of audio content. They can create background scores, jingles, or sound effects for various purposes. OpenAI’s MuseNet is an example of an app capable of composing music in different styles.

- 3D Model Generation Apps: These applications generate 3D models that are especially useful in fields like video game development, architectural design, or virtual reality.

- Video Generation Apps: These applications can generate new video clips. They are still at an early stage of development, but they have the potential to revolutionize fields like film, advertising, and social media.

- Data Augmentation Apps: These applications generate synthetic data to augment existing datasets. This can be particularly useful when data is limited or expensive to collect. For example, synthetic patient data in healthcare can be used for research without compromising the privacy of real patients.

- Style Transfer Apps: These applications apply the style of one data set (e.g., an artist’s painting style) to another (e.g., a photograph). This allows for a lot of creativity and customization.

Types of Generative AI Models

The following are the most modern types of neural networks currently used for generating high-quality results.

- Generative Adversarial Networks (GANs): GANs are a type of generative AI model in which two neural networks compete against each other. One network, the generator, tries to create new content that is indistinguishable from real data. The other network, the discriminator, tries to distinguish between real and fake data.

- Variational Autoencoders (VAEs): VAEs are a generative AI model that uses a neural network to encode data into a latent space and then decode it back into new data.

- Transformers: Transformers are a type of neural network architecture that is very effective for generative AI tasks. Transformers are often used in LLMs.
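
The generator and discriminator competition described for GANs above can be made concrete with loss functions. The sketch below computes binary cross-entropy losses from discriminator scores. It assumes the commonly used non-saturating form of the generator loss, which is one choice among several, and the function names are hypothetical.

```python
import math
from typing import Sequence


def discriminator_loss(d_real: Sequence[float], d_fake: Sequence[float]) -> float:
    """Mean binary cross-entropy: real samples should score near 1, fakes near 0.

    d_real and d_fake are discriminator outputs in the open interval (0, 1).
    """
    real_term = sum(-math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(-math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake: Sequence[float]) -> float:
    """Non-saturating generator loss: low when the discriminator is fooled."""
    return sum(-math.log(p) for p in d_fake) / len(d_fake)
```

The two losses pull in opposite directions on the same scores: as the discriminator rates generated samples closer to 1, `generator_loss` falls while `discriminator_loss` rises.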

Some examples of large language models include:

- GPT-3: GPT-3 is a large language model developed by OpenAI. GPT-3 has been trained on a massive dataset of text and code, and it can generate text, translate languages, and answer questions in an informative way.

- Bard: Bard is a large language model developed by Google AI. Bard is similar to GPT-3, but it has been trained on a different dataset of text and code.

Generative AI Tech Stack

Apps (end users) Without Proprietary Models

End-user-facing generative AI applications are designed to interact with end-users, leveraging generative AI models to create new content (text, images, audio) or solutions based on user input. These applications don’t have to develop or own the AI models they use; they often depend on third-party APIs or services.

In this competitive landscape, many products utilize similar AI models. This leads to an emerging market dynamic where players try to differentiate through specialized applications, unique features, improved workflows, and integrations with existing applications used by businesses or end-users.

Several of these applications have rapidly entered the market using AI models as a service, effectively bypassing the costs of training proprietary models. As a result, many of these applications are not fully vertically integrated, as the ownership of the AI models remains with third-party service providers.

For example, some applications, such as writing assistants or chatbots, primarily use GPT or BERT, even if their core offering is not the AI component. These usually access the foundation model via an API, which means they are not end-to-end solutions.

Many applications do not publicly disclose their reliance on third-party models or APIs, while others use a combination of proprietary and open-source models. Since the generative AI landscape is changing so fast, we are not mentioning any specific apps, as by the time we publish this article, they might change their language model.

Proprietary or Closed Source Foundation Models (Pre-trained)

“Foundation models” have gained prominence in the AI sphere as large-scale machine learning models, trained on extensive data, that can be fine-tuned for diverse downstream tasks. These models are the building blocks from which numerous applications and models can be created, with OpenAI’s GPT and Google’s Bard and PaLM 2 as prime examples.

Notably, these models fall under the “Closed Source” category, implying that while they can be accessed and used via APIs, their core code, specific training data, and process details are not public. This approach is intended to prevent misuse, safeguard intellectual property, and manage the resources required for such extensive model releases.

For instance, using OpenAI’s GPT entails making API calls where a prompt is sent, and a generated text is returned. Users leverage the trained model without having access to or the ability to alter the code used for its training or the specific data on which it was trained.
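
The prompt-in, text-out exchange can be sketched with the Python standard library alone. The endpoint URL, the JSON field names (`prompt`, `max_tokens`, `text`), and the bearer-token header below are assumptions for illustration and do not describe any specific provider; real services document their own URLs and schemas.

```python
import json
import urllib.request

# Hypothetical endpoint and field names, for illustration only; real providers
# define their own URLs, authentication, and JSON schemas.
API_URL = "https://api.example.com/v1/generate"


def build_request(prompt: str, api_key: str, max_tokens: int = 100) -> urllib.request.Request:
    """Build (but do not send) a POST request carrying the prompt as JSON."""
    body = json.dumps({"prompt": prompt, "max_tokens": max_tokens}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def parse_response(raw: bytes) -> str:
    """Extract generated text from a JSON response of the assumed shape."""
    return json.loads(raw.decode("utf-8"))["text"]


def generate(prompt: str, api_key: str) -> str:
    """Send the prompt and return the generated text (performs a network call)."""
    with urllib.request.urlopen(build_request(prompt, api_key), timeout=30) as resp:
        return parse_response(resp.read())
```

Note that nothing in this client touches model weights or training data; the caller only sees the request and the response, which is the defining trait of the closed-source setup.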

The benefits of using closed-source foundation models are their high accuracy, the production of high-quality content, scalability to meet the needs of many users, and security against unauthorized access. However, they present challenges too. For example, their development and maintenance can be costly, and there can be bias based on the training data.

Closed-source foundation models also extend to image generation, as demonstrated by DALL-E and Imagen. Both are trained on datasets of images and text to create realistic images from text descriptions. Despite the challenges noted above, these models offer accuracy, scalability, and security, which signals their potential in AI.

There are three ways to connect to closed-source foundation models: directly through the provider’s API, which is efficient but potentially expensive; indirectly through third-party services, which can be less expensive but less efficient; and through a hybrid connection, which combines both methods to balance efficiency and cost. In a future post, we will discuss the benefit/cost analysis of connecting to proprietary models. Stay tuned.
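
One possible reading of a hybrid connection is a small router that sends latency-sensitive requests over the direct path and everything else over the indirect one, falling back to the other path on failure. This routing policy is an assumption made for illustration, not a method described in this article, and `HybridRouter` and `Backend` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Callable

# A backend is any callable that maps a prompt to generated text.
Backend = Callable[[str], str]


@dataclass
class HybridRouter:
    direct: Backend    # direct provider API: assumed faster, more expensive
    indirect: Backend  # third-party service: assumed cheaper, slower

    def generate(self, prompt: str, latency_sensitive: bool) -> str:
        """Pick a path per request; fall back to the other path on failure."""
        if latency_sensitive:
            primary, fallback = self.direct, self.indirect
        else:
            primary, fallback = self.indirect, self.direct
        try:
            return primary(prompt)
        except Exception:
            # Any failure on the preferred path triggers the alternative path.
            return fallback(prompt)
```

In practice the routing rule would come out of the benefit/cost analysis for a given product; the boolean flag here simply marks where that decision plugs in.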

Build a Proprietary Model

Businesses that lack AI skills can hire a software developer, such as Krasamo, to build a proprietary ML model. This model would be trained using the client’s data and tailored to their needs. Furthermore, developers have numerous opportunities to initially create models using third-party data and customize them with the client’s data once they secure a contract. Many industries, such as finance or healthcare, must build closed models to preserve data privacy, accuracy, and control.

Models Hub Platforms

Model Hubs serve as centralized platforms for hosting, sharing, and accessing pre-trained machine learning models, and they have proven instrumental in the context of generative AI. They are designed to foster collaboration, disseminate knowledge, and propel advancements in the field, offering a range of models for tasks including text generation, image generation, and question answering.

These hubs provide easy access to a broad range of pre-trained models, ready for immediate use, significantly reducing the time and resources required to operate a model. Their interfaces allow users to conveniently search for models based on criteria such as task or language, ensuring an efficient user experience. They are designed to scale and meet the needs of many users, ensuring reliable performance. Moreover, they often adhere to stringent security measures to protect user data.

Model Hubs also bolster community collaboration. By facilitating the sharing of models within a shared space, they foster a sense of community where developers can learn from each other and collaborate on enhancing existing models or creating new ones.

However, while Model Hubs offer numerous benefits, they also present certain challenges. Depending on the data they were trained on, hosted models can carry bias, so users should evaluate a model for bias before adopting it.

Hugging Face Model Hub and Replicate are two leading platforms for hosting and sharing pre-trained models, catering to various tasks, including natural language processing, image classification, and speech recognition.

Hugging Face Model Hub is a specialized platform that houses many models focusing on natural language processing tasks. The platform is popular for sharing and utilizing Transformer models, a neural network architecture particularly effective for natural language processing tasks.

On the other hand, Replicate is a versatile Model Hub that enables developers to share, discover, and reproduce machine learning projects and to run machine learning models in the cloud from their own code, without having to set up any servers. Despite being newer than Hugging Face Hub, it has been growing rapidly, offering several features that make it an excellent choice for sharing and using pre-trained models.

Open-Source Foundation Models

Open-source foundation models are large-scale trained machine learning models that are publicly accessible. They offer free access to their codebase, architecture, and often even the trained model weights (under specific licensing terms).

Developed by various research teams, these models provide a platform for anyone to adapt and build apps with generative AI capabilities on top of them. Open-source foundational models are instrumental in product development and service innovations that foster an innovative and diverse AI research environment.

These foundational models undergo pre-training on enormous datasets encompassing text, code, and images. This extensive training process equips these models to comprehend and reproduce various language patterns, structures, and information. Upon completion of the training, these models can generate novel content in multiple formats, including text, images, and music.

Some of the most prominent open-source foundational models are listed below. Please visit their respective web pages for the most recent information about these models:

End-to-end apps (end-user-facing applications with proprietary models)

End-to-end applications in the realm of generative AI are comprehensive software solutions that employ generative models to provide specific services to end users. Such applications typically include proprietary models a particular company has developed and owns. They encapsulate these models within a user-friendly interface, concealing the intricate technicalities of the underlying AI.

The term “end-to-end” signifies that the application manages all process aspects, from the initial data input to the final output or action. This is especially pertinent to generative AI, where applications can take user inputs, process them via a proprietary AI model, and deliver an output within a single, seamless application.

Midjourney and Runway ML exemplify end-to-end applications built on proprietary models in the generative AI context. Midjourney is an image generation service: users submit a text prompt, the company’s proprietary model processes it, and the generated images are delivered directly to the user. Users can leverage the technology without being machine learning or data science experts.

More broadly, the same end-to-end pattern can be used to build products such as an AI-powered design tool, an automatic content generator, or a predictive text application.

All these applications are considered end-to-end because they run the entire pipeline: acquiring the user’s input, processing it with a proprietary AI model, and delivering the generated output back to the user.

Runway ML is a creative toolkit driven by machine learning, aiming to provide access to machine learning for creators from diverse backgrounds, such as artists, designers, filmmakers, and more. The platform offers an intuitive interface that lets users experiment with pre-trained models and machine-learning techniques without needing extensive technical knowledge or programming skills.

For example, in the scope of end-to-end applications, a user could employ Runway ML to build a generative art project, where the user provides a source image or a set of parameters, and the application generates an art piece based on that input. This entire process is managed within Runway ML’s interface, forming an end-to-end application for creating generative art.

End-to-end apps using proprietary generative AI models present numerous benefits. They are easy to use, providing user-friendly interfaces for content generation. They are often affordable or free, scale to accommodate many users, and incorporate strong security measures for user data protection.

However, there are challenges as well. These applications may exhibit bias, depending on the data they were trained on, and there could be privacy concerns as these apps may collect and use user data in ways unknown to users. The generated output may not always be accurate, depending on the task at hand. Additionally, these applications may not match human creativity levels and may fail to generate original content. As generative AI technology evolves, we can anticipate even more innovative and exciting applications.

AI Model Training and Inference Workloads in the Cloud

Generative AI models are developed to generate new content based on the patterns they learn from vast training datasets. However, given the size and complexity of these datasets, the process of training generative AI models is both computationally intensive and storage demanding.

To overcome these challenges, AI engineers leverage the power of cloud computing platforms, which provide the necessary resources without substantial investment in local hardware.

A range of cloud computing platforms facilitates the training and deployment of generative AI models. Each platform has a suite of services tailored for AI applications. For instance, Amazon SageMaker, Azure Machine Learning, and Google Cloud’s Vertex AI provide managed environments for effectively training and deploying machine learning models.

The following steps form the typical machine learning pipeline for training and deploying a generative AI model on a cloud platform:

- First, choose a cloud computing platform.

- Then, set up a development environment.

- Prepare the data.

- Train the model.

- Deploy the model.
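
The last three steps above can be sketched locally with a toy generative model. The example below is an illustration under stated assumptions, not a cloud workflow: a tiny word-level Markov chain stands in for a real generative model, a returned Python function stands in for a deployed endpoint, and all function names are hypothetical.

```python
import random
from collections import defaultdict
from typing import Callable, Dict, List


def prepare_data(raw_text: str) -> List[str]:
    """Step 'prepare the data': normalize and tokenize into lowercase words."""
    return raw_text.lower().split()


def train_model(tokens: List[str]) -> Dict[str, List[str]]:
    """Step 'train the model': record which words follow each word."""
    transitions: Dict[str, List[str]] = defaultdict(list)
    for current, following in zip(tokens, tokens[1:]):
        transitions[current].append(following)
    return dict(transitions)


def deploy_model(model: Dict[str, List[str]], seed: int = 0) -> Callable[[str, int], str]:
    """Step 'deploy the model': expose inference as a callable endpoint stand-in."""
    rng = random.Random(seed)

    def generate(start: str, max_words: int) -> str:
        words = [start]
        # Keep sampling a follower until the length cap or a dead end is reached.
        while len(words) < max_words and words[-1] in model:
            words.append(rng.choice(model[words[-1]]))
        return " ".join(words)

    return generate
```

A typical run chains the stages: `generate = deploy_model(train_model(prepare_data(text)))`, then `generate("the", 5)`. On a cloud platform each stage would typically map to a managed service rather than a local function.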

Cloud-based infrastructure offers multiple advantages for running model training and inference workloads:

- Scalability: Cloud platforms can instantly scale resources to meet the demands of large datasets and intense workloads, optimizing cost and efficiency.

- Parallelism: Cloud infrastructure supports concurrent processing, allowing multiple training or inference tasks to run simultaneously, speeding up overall processes, and facilitating efficient hyperparameter tuning.

- Storage and Data Management: Cloud platforms provide robust storage solutions and data management services, simplifying tasks like data cleaning, transformation, and secure storage.

- Accessibility: These platforms are accessible from anywhere, encouraging global collaboration and providing access to cutting-edge hardware like GPUs and TPUs.

- Managed Services: Many cloud platforms provide managed AI services, abstracting away the details of infrastructure management and allowing developers to concentrate on crafting and refining their AI models.
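
The parallelism point above, including hyperparameter tuning, can be illustrated with concurrent trials. This is a sketch only: `train_and_score` is a made-up stand-in for a real training job, and local threads stand in for separate cloud workers.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Tuple


def train_and_score(learning_rate: float) -> float:
    """Toy stand-in for a training job: returns a validation loss.

    The loss is a made-up function minimized at learning_rate = 0.01.
    """
    return (learning_rate - 0.01) ** 2


def tune(learning_rates: List[float], workers: int = 4) -> Tuple[float, float]:
    """Run all trials concurrently and return (best_rate, best_loss)."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map preserves input order, so losses line up with learning_rates.
        losses = list(pool.map(train_and_score, learning_rates))
    best_loss, best_rate = min(zip(losses, learning_rates))
    return best_rate, best_loss
```

Because the trials are independent of one another, adding workers shortens the wall-clock time of the search without changing its result.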

Once trained, models are ready for inference – generating predictions based on new data. Cloud platforms offer services that host the model, provide an API for applications, ensure scalable handling of multiple requests, and allow for monitoring and updates as needed.

GPUs and TPUs: Accelerator Chips

In generative AI, the selection of computing hardware is a critical factor that can greatly influence the efficiency of model training and inference workloads. Key hardware components in this context include GPUs (Graphics Processing Units) and TPUs (Tensor Processing Units). These specialized chips are designed to accelerate the training and inference of machine learning models, with GPUs having a more general-purpose application and TPUs explicitly crafted for machine learning tasks.

GPUs were initially designed for rapidly rendering images and videos, primarily for gaming applications, but they have proven well-suited to the calculations necessary for training machine learning models. They can perform many operations simultaneously due to a design that supports a high degree of parallelism. This is particularly beneficial for generative AI models, which often deal with large amounts of data and require complex computations. In these models, GPUs can execute typical operations like matrix multiplication concurrently, resulting in a significantly faster training process than on a traditional CPU (Central Processing Unit).
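
To see why matrix multiplication parallelizes so well, note that every output element can be computed without reference to any other. The plain-Python sketch below is a sequential reference written to expose that structure; it is not how a GPU is programmed, and the function names are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def output_cell(a: Matrix, b: Matrix, i: int, j: int) -> float:
    """Compute one output element; it depends only on row i of a and column j of b."""
    return sum(a[i][k] * b[k][j] for k in range(len(b)))


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Sequential reference: a GPU would compute many of these cells at once."""
    return [[output_cell(a, b, i, j) for j in range(len(b[0]))] for i in range(len(a))]


def multiply_add_count(m: int, k: int, n: int) -> int:
    """Number of multiply-add operations for an (m x k) by (k x n) product."""
    return m * k * n
```

Since no call to `output_cell` reads the result of another, all of the cells could in principle be evaluated at the same time, which is the property parallel hardware exploits.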

On the other hand, Tensor Processing Units (TPUs), a type of processor developed by Google, are built to expedite machine learning workloads. They excel in accelerating tensor operations, a key component of many machine learning algorithms. TPUs possess a large amount of on-chip memory and high memory bandwidth, which allows them to handle large volumes of data more efficiently. As a result, they are especially proficient in deep learning tasks and can outperform GPUs on some workloads.

Given these hardware capabilities, when planning to build generative AI applications, some key aspects to consider include:

- Dataset Size and Complexity: The size and complexity of the dataset will determine the necessary computing power required to train the model.

- Model Type: The model type will also impact the computing power required. For instance, recurrent neural networks (RNNs) process sequences step by step, which makes them harder to parallelize during training than convolutional neural networks (CNNs).

- Desired Accuracy: Higher accuracy typically requires more training data and computing power.

- Performance Requirements: The computational requirements of your model dictate the choice between CPUs, GPUs, and TPUs. Generative models, such as GANs (Generative Adversarial Networks), often demand a lot of computational power, and using GPUs or TPUs can significantly accelerate the training process.

- Cost: While GPUs and TPUs can be costly, they may reduce the training time of your models, potentially leading to long-term cost savings. Balancing the initial cost of these units against their potential to expedite your development process and reduce costs over time is crucial.

- Ease of Use: Certain machine learning frameworks facilitate using GPUs or TPUs. For example, TensorFlow, developed by Google, supports both. When selecting your hardware, the ease of integrating it with your chosen software stack should be considered.

- Scalability: As your application expands, you may need to augment your computational resources. GPUs and TPUs support distributed computing, enabling you to utilize multiple concurrent units to process larger models or datasets.

- Energy Efficiency: The energy consumption of training machine learning models can be high, which may lead to substantial costs and environmental impacts. TPUs, designed for energy efficiency, could be advantageous if extensive training is planned.
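
The cost trade-off above comes down to simple arithmetic: a pricier accelerator pays off once its speedup exceeds the ratio of hourly rates. The sketch below uses hypothetical function names, and the figures in the note that follows are invented for illustration rather than taken from any real price list.

```python
def training_cost(hourly_rate: float, hours: float) -> float:
    """Total cost of one training run on rented hardware."""
    return hourly_rate * hours


def break_even_speedup(cpu_rate: float, accelerator_rate: float) -> float:
    """Minimum speedup at which the accelerator costs no more than the CPU."""
    return accelerator_rate / cpu_rate
```

For example, with made-up rates of 1.00 per hour for a CPU instance and 4.00 per hour for an accelerator, a 100-hour CPU run costs 100.00. If the accelerator finishes the same job in 10 hours, the run costs 40.00, which is cheaper because the 10x speedup exceeds the break-even speedup of 4x.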

Lastly, selecting compute hardware is only one aspect of building a generative AI application. Other considerations include the choice of your machine learning framework, data pipeline, and model architecture, among other factors. Also, remember to factor in the cost, availability, and expertise required to use compute hardware effectively, as these elements can also impact the successful implementation of generative AI apps.

Key Takeaway

Building a generative AI app doesn’t require owning a foundational model or being vertically integrated. Getting started can be as simple as creating a generative AI app using available models, which can set the stage for future development.

Businesses can initially leverage open-source models to lower costs. As their solution scales, these models can be hosted on cloud services for improved integration and easier sharing.

Moreover, an effective entry strategy could be to enrich your current apps with AI capabilities, thus strengthening your core business offerings. Owning proprietary data can be advantageous for refining your machine learning model, but it requires more capital expenditure. Thus, striking a balance between leveraging existing resources and investing in new assets is key to achieving success in generative AI.

Unleash the potential of your business with a Krasamo MLOps team! Our experts offer comprehensive machine learning consulting, starting with a discovery call assessment to identify your needs and opportunities and map the path to your success.