Table of Contents

- The Vital Importance of Software Testing

- The Potential of LLMs in Automating Software Tasks

- Testing and Evaluations Using LLMs

- Automating Test Case Generation with LLMs

- Practical Implementation of LLMs in Software Testing

- Security Enhancements and Debugging Capabilities

- Integrating LLMs with CI/CD Pipelines

- Conclusion

- Engage with Our Experts

The relentless evolution of software development demands equally dynamic approaches to quality assurance. In this context, software testing is not just a phase in the Software Development Life Cycle (SDLC) but a pivotal component that ensures software safety, functionality, and performance before it reaches the market.

Traditionally focused on identifying defects and ensuring compliance with specifications, testing is now being transformed by Large Language Models (LLMs). These AI systems can automate and enhance testing tasks, integrating deeply into the SDLC to boost efficiency and market responsiveness.

The Vital Importance of Software Testing

Software testing serves multiple vital functions, from quality assurance and risk management to enhancing user satisfaction and ensuring cost efficiency. By rigorously validating each software module against defined criteria, testing helps catch potential failures early, safeguarding the developer’s reputation and reducing costs associated with post-release fixes. Comprehensive testing across the SDLC stages facilitates a smoother transition from development to deployment, ensuring each application part is fully prepared for the next stage or final release.

The Potential of LLMs in Automating Software Tasks

Integrating LLMs into software testing workflows presents a unique opportunity to streamline and refine test scenario creation, leading to significant business benefits:

- Automated Test Case Generation: LLMs, such as OpenAI’s GPT models, can generate test cases and test scripts from natural language inputs. They can also assist in security analysis by suggesting likely vulnerabilities and generating security-focused test scenarios. This automation reduces the time and effort required to create test cases manually, resulting in faster development cycles and more efficient resource allocation. Generated tests still need validation: a multi-step filtration process, like the one used by TestGen-LLM [1], keeps only the candidates that pass each check.

- Enhanced Test Coverage: LLMs can identify nuanced edge cases and potential security gaps that traditional methods might overlook, thereby enhancing test coverage and application robustness. By catching issues early in the development process, organizations can reduce the costs associated with fixing defects in later stages or after release. Developers evaluate success with metrics such as the percentage of generated test cases that build and pass, along with quantifiable improvements in test coverage and the detection of previously unnoticed bugs.

- Enhanced Debugging Capabilities: Integrating LLMs into debugging can revolutionize detecting and resolving software defects. By automatically analyzing code to identify inconsistencies and potential errors, LLMs can suggest precise areas of concern that need attention, thereby accelerating debugging. Moreover, LLMs can be trained to suggest fixes based on common patterns they learn from vast code datasets. This speeds up debugging and helps maintain code quality throughout the development phases.

- Streamlined Workflows: LLMs’ automation capabilities in generating test cases and identifying potential issues free developers and testers to focus on higher-level tasks and strategic decision-making. This optimization of human resources contributes to faster development times and allows teams to innovate and deliver value to the business more quickly.

- Improved Software Quality: LLMs’ increased test coverage and accuracy directly contribute to higher-quality software. Organizations can deliver more reliable and performant applications by identifying and addressing a wider range of potential issues, improving customer satisfaction, and reducing reputational risks.

- Cost Reduction: Implementing LLMs in software testing can lead to significant cost savings. Automated test case generation reduces the need for manual testing efforts, while early defect detection minimizes the costs associated with fixing issues in later stages. The improved software quality resulting from LLM-driven testing can reduce maintenance and support costs over the application’s lifecycle.
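The filtration idea mentioned in the list above can be sketched in a few lines of Python. This is a minimal illustration, not the pipeline from [1]: the `Candidate` class, the two filters, and the sample tests are all hypothetical, and a real pipeline would typically add a coverage-improvement check and run generated code in a sandbox rather than with `exec`.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str    # name of the zero-argument test function defined in `source`
    source: str  # generated Python source (hypothetical model output)


def builds(candidate: Candidate) -> bool:
    """Filter 1: the generated source must at least compile."""
    try:
        compile(candidate.source, candidate.name, "exec")
        return True
    except SyntaxError:
        return False


def passes(candidate: Candidate, runs: int = 3) -> bool:
    """Filter 2: the test must pass on every run (a crude flakiness check)."""
    for _ in range(runs):
        namespace: dict = {}
        try:
            # Assumption: running untrusted code directly is acceptable only
            # in a toy example; isolate it in any real setup.
            exec(candidate.source, namespace)
            namespace[candidate.name]()
        except Exception:
            return False
    return True


def filter_candidates(candidates: list[Candidate]):
    """Return the surviving candidates plus build and pass rates."""
    built = [c for c in candidates if builds(c)]
    passed = [c for c in built if passes(c)]
    total = len(candidates) or 1
    stats = {"build_rate": len(built) / total, "pass_rate": len(passed) / total}
    return passed, stats


candidates = [
    Candidate("test_upper", "def test_upper():\n    assert 'ab'.upper() == 'AB'\n"),
    Candidate("test_broken", "def test_broken(:\n    pass\n"),  # does not compile
    Candidate("test_wrong", "def test_wrong():\n    assert 1 + 1 == 3\n"),  # fails
]
kept, stats = filter_candidates(candidates)
print([c.name for c in kept], stats)
```

The build and pass rates returned here correspond to the success metrics named in the list: the share of generated tests that build and the share that pass.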

Testing and Evaluations Using LLMs

When integrating large language models (LLMs) into software testing frameworks, it is critical to distinguish between ‘testing’ and ‘evaluations’ [2]. While both are integral to software quality assurance, they serve different functions.

Testing with LLMs primarily focuses on verifying the correctness and functionality of the software that interacts with or incorporates the LLM. This involves rigorous checks to ensure the code performs as expected under various conditions, proving the software’s reliability and operational readiness.

In practical terms, LLMs can be applied across various types of testing, each serving unique purposes within the software development lifecycle:

- Unit Testing: Automating the creation of unit tests that check individual components for correct behavior.

- Functional Testing: Generating comprehensive test cases that validate the functionalities against the specifications.

- Integration Testing: Assisting in creating test cases that ensure modules or services work together as expected.

- Regression Testing: Quickly generating tests that cover new changes and ensure they do not disrupt existing functionality.

- Sanity Testing: Quickly checking specific functionality after minor software changes to ensure that recent updates have not destabilized essential features.

- Performance Testing: Simulating different usage and load conditions to predict how changes impact system performance.

On the other hand, evaluations delve into assessing the quality and effectiveness of the outputs generated by LLMs. This process evaluates how well the LLM’s responses align with the specific needs and objectives of the application, going beyond mere functionality to assess contextual appropriateness, relevance, and even the creative quality of content produced by the LLM. Such evaluations are critical as they measure:

- Output Quality Assessment: The precision, relevance, and practicality of LLM outputs against established benchmarks.

- Model Graded Evaluations: The subjective quality of outputs where correctness may vary based on use-case specifics.

- Bias and Fairness Checks: Ensuring outputs are free of biases that could skew user interactions or decisions.

- Robustness and Reliability: The LLM’s performance across varied and unforeseen scenarios to ensure robustness against potential exploitation.

Understanding and implementing testing and evaluations are vital for effectively harnessing LLMs within the software development lifecycle, enhancing product quality and development teams’ innovative capacity.
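The difference between the two activities can be illustrated with a small sketch. Everything here is an assumption made for illustration: `summarize_ticket` is a hypothetical application function, `fake_llm` stands in for a real model so the example runs offline, and `keyword_recall` is a deliberately simple stand-in for an output quality metric.

```python
from typing import Callable

LLM = Callable[[str], str]


def summarize_ticket(ticket: str, llm: LLM) -> str:
    """Application code under test: builds a prompt and trims the reply."""
    if not ticket.strip():
        raise ValueError("ticket must not be empty")
    return llm(f"Summarize this support ticket in one sentence:\n{ticket}").strip()


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call; returns a canned reply."""
    return "  User cannot log in after the password reset.  "


# Testing: deterministic pass/fail checks on the code around the model.
def test_summary_is_trimmed():
    expected = "User cannot log in after the password reset."
    assert summarize_ticket("Login fails", fake_llm) == expected


def test_empty_ticket_rejected():
    try:
        summarize_ticket("   ", fake_llm)
    except ValueError:
        return
    raise AssertionError("expected ValueError")


# Evaluation: a graded score for output quality, compared to a threshold.
def keyword_recall(output: str, required: list[str]) -> float:
    """Fraction of required terms that appear in the output (case-insensitive)."""
    text = output.lower()
    hits = sum(1 for term in required if term.lower() in text)
    return hits / len(required)


test_summary_is_trimmed()
test_empty_ticket_rejected()
summary = summarize_ticket("Login fails", fake_llm)
score = keyword_recall(summary, ["log in", "password", "reset"])
print(f"keyword recall: {score:.2f}")
```

The tests either pass or fail, while the evaluation produces a score that a team would compare against a threshold chosen for its use case.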

Automating Test Case Generation with LLMs

LLMs revolutionize test case generation by converting acceptance criteria directly into executable test code. This automation extends beyond simple test creation—it includes the generation of detailed, context-aware test scenarios that cover a broader spectrum of potential system behaviors, including edge and corner cases. By reducing the manual effort required in crafting these tests, LLMs free up developers and testers to focus on higher-level strategy and design considerations, thereby accelerating development cycles and improving overall software quality.
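As a rough sketch of how acceptance criteria might be turned into test code, the example below builds a prompt from a list of criteria and checks the reply before anyone runs it. The prompt wording, the `apply_discount` function, and `stub_model` are all hypothetical; `stub_model` returns a canned reply so the sketch runs without a model and would be replaced by a real client call.

```python
import ast

PROMPT_TEMPLATE = """You are writing pytest tests.
Function under test: {signature}
Acceptance criteria:
{criteria}
Return only Python code, one test function per criterion."""


def build_prompt(signature: str, criteria: list[str]) -> str:
    """Format the acceptance criteria as a bullet list inside the prompt."""
    bullet_list = "\n".join(f"- {c}" for c in criteria)
    return PROMPT_TEMPLATE.format(signature=signature, criteria=bullet_list)


def stub_model(prompt: str) -> str:
    """Hypothetical model call; returns a canned reply for illustration."""
    return (
        "def test_discount_applied_over_100():\n"
        "    assert apply_discount(200.0) == 180.0\n"
        "\n"
        "def test_no_discount_at_or_below_100():\n"
        "    assert apply_discount(100.0) == 100.0\n"
    )


def extract_test_names(code: str) -> list[str]:
    """Parse the reply and list top-level test functions.

    Raises SyntaxError if the reply is not valid Python.
    """
    tree = ast.parse(code)
    return [
        node.name
        for node in tree.body
        if isinstance(node, ast.FunctionDef) and node.name.startswith("test_")
    ]


criteria = [
    "Orders over 100 receive a 10% discount",
    "Orders of 100 or less are charged in full",
]
prompt = build_prompt("apply_discount(total: float) -> float", criteria)
generated = stub_model(prompt)
test_names = extract_test_names(generated)
# Cheap sanity check: one generated test per acceptance criterion.
assert len(test_names) == len(criteria)
print(test_names)
```

Parsing the reply with `ast` before executing it is a design choice: it rejects malformed output early and gives a quick count of tests to compare against the number of criteria.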

Practical Implementation of LLMs in Software Testing

Implementing LLMs for test case generation involves three key steps. First, the model must be adapted with domain-specific data sets so that it can accurately understand and respond to complex requirements. Second, LLM-generated test cases must be integrated into existing test management systems so that they are used alongside traditional methods. Finally, continuous learning lets the model refine its output based on ongoing feedback and testing results, steadily improving the quality and relevance of the test scenarios it generates.

When writing code for testing and automation, developers often need to create compilation files and write unit tests, which can be time-consuming. Many developers claim they spend at least 30% of their time on these tasks.

Security Enhancements and Debugging Capabilities

One of the standout capabilities of LLMs is their application in security testing. Trained on diverse, security-focused datasets, these models can proactively develop test cases that address potential vulnerabilities, a critical advantage given the increasing sophistication of cyber threats. Additionally, LLMs in CI/CD pipelines contribute to more effective debugging by automatically diagnosing and logging errors during test runs, which allows for rapid resolution of issues that could otherwise delay deployment.
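A security-focused test scenario of this kind might look like the sketch below. The validator `is_valid_username` is a hypothetical function under test, and the hostile inputs are hand-written examples of what a model could propose, not real model output.

```python
import re

USERNAME_RE = re.compile(r"[A-Za-z0-9_]{3,20}")


def is_valid_username(value: str) -> bool:
    """Hypothetical validator under test: 3-20 letters, digits, or underscores."""
    return USERNAME_RE.fullmatch(value) is not None


# Illustrative payloads of the kind a model might suggest when asked
# for hostile inputs. Hand-written for this sketch.
HOSTILE_INPUTS = [
    "admin' OR '1'='1",           # SQL injection
    "<script>alert(1)</script>",  # cross-site scripting
    "../../etc/passwd",           # path traversal
    "a" * 10_000,                 # oversized input
    "bob\x00",                    # embedded null byte
]


def run_security_cases() -> list[str]:
    """Return the hostile inputs that the validator wrongly accepted."""
    return [payload for payload in HOSTILE_INPUTS if is_valid_username(payload)]


accepted = run_security_cases()
print("wrongly accepted:", accepted)
```

An empty list means every hostile input was rejected; any entry in the list points to a payload that deserves a closer look.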

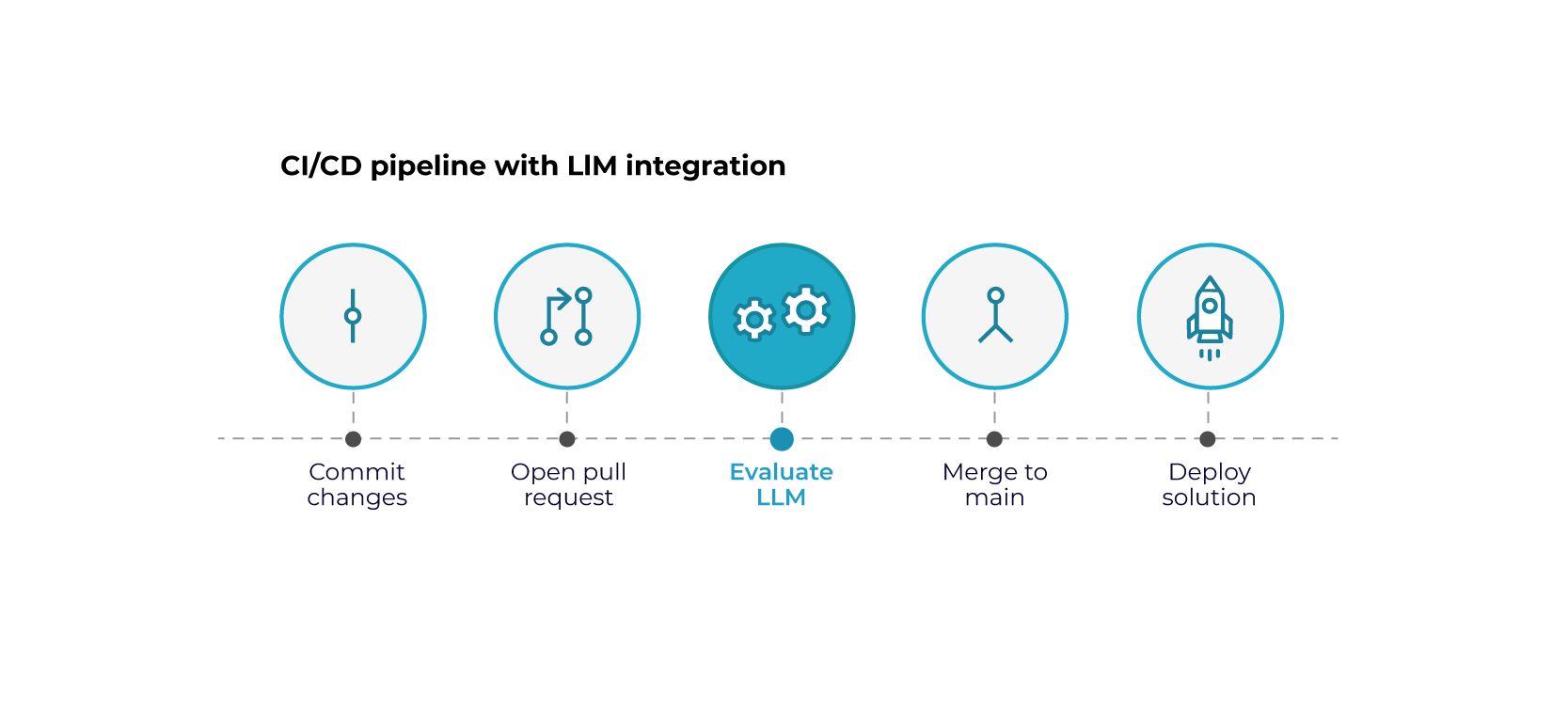

Integrating LLMs with CI/CD Pipelines

Establishing a robust Continuous Integration/Continuous Deployment (CI/CD) process is essential in modern software development. Integrating CI/CD frameworks accelerates the development cycle and enhances the effectiveness of testing strategies, especially when combined with the capabilities of large language models (LLMs).

The CI/CD pipeline supports frequent merging of code changes into a central repository, where each update, whether a refined LLM prompt or a newly integrated data set, is automatically built and tested. This setup is crucial when deploying LLMs, as it allows AI-generated outputs to be validated continuously against expected behaviors and standards.

Automated tests within the CI/CD framework quickly pinpoint issues such as output biases or inaccuracies, ensuring errors are corrected before they impact the broader application.
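One way such an automated check could be wired into a pipeline step is sketched below. The `Case` structure, the sample prompts, and `stub_model` are assumptions for illustration; in a real pipeline the stub would be replaced by a call to the deployed model, and the returned code would be passed to `sys.exit` so that a failure stops the build.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Case:
    prompt: str
    must_contain: list[str]      # terms the reply is expected to include
    must_not_contain: list[str]  # terms that indicate a wrong or unsafe reply


def check_case(case: Case, model: Callable[[str], str]) -> list[str]:
    """Return human-readable failures for one case (empty list means pass)."""
    reply = model(case.prompt).lower()
    failures = [f"missing {t!r}" for t in case.must_contain if t.lower() not in reply]
    failures += [f"forbidden {t!r}" for t in case.must_not_contain if t.lower() in reply]
    return failures


def run_gate(cases: list[Case], model: Callable[[str], str]) -> int:
    """Exit code for the CI step: 0 if every case passes, 1 otherwise."""
    failed = 0
    for case in cases:
        failures = check_case(case, model)
        if failures:
            failed += 1
            print(f"FAIL {case.prompt!r}: {', '.join(failures)}")
    print(f"{len(cases) - failed}/{len(cases)} cases passed")
    return 1 if failed else 0


def stub_model(prompt: str) -> str:
    """Canned reply so the sketch runs without a model."""
    return "Our refund window is 30 days from delivery."


CASES = [
    Case("What is the refund window?", ["30 days"], ["guarantee"]),
    Case("Can I get a refund after 60 days?", ["30 days"], ["yes"]),
]

# In a pipeline this value would be handed to sys.exit().
exit_code = run_gate(CASES, stub_model)
```

Substring checks are a blunt instrument; they are used here only to keep the example short, and a team would likely swap in whatever evaluation metric fits its application.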

The ultimate goal of integrating LLMs with CI/CD is a very high degree of automation: in many contexts, code that has been merged and tested is production-ready with near-zero manual intervention. Teams that integrate LLMs into CI/CD pipelines streamline their testing processes through automated test builds and real-time error detection.

Conclusion

The advent of LLMs marks a significant milestone in software testing. LLMs streamline workflows and introduce higher precision and comprehensiveness in testing activities by automating and enhancing traditional testing methods. For businesses, this translates into faster development times, cost reductions, and the deployment of higher-quality software. As these technologies evolve, their integration into standard testing practices redefines the quality benchmarks in software development, establishing a new era of efficiency and innovation in the industry.

Engage with Our Experts

Are you ready to elevate your software testing strategy and harness the cutting-edge capabilities of large language models? At Krasamo, our dedicated developers and AI consultants are poised to help you integrate these transformative technologies into your operations. By partnering with us, you will streamline your testing processes and enhance your software development lifecycle’s overall quality and efficiency.

Contact us today to discuss how we can augment your team and guide you through successfully adopting AI and LLMs tailored to your business needs. Let’s innovate together and set new benchmarks in software excellence.

References:

[1] Automated Unit Test Improvement using Large Language Models at Meta

[2] Software Testing with Large Language Models: Survey, Landscape, and Vision
